Stripped by an Algorithm: The Global Crisis of AI Undressing Tool Abuse and the Laws That Haven’t Caught Up

A moment that keeps repeating

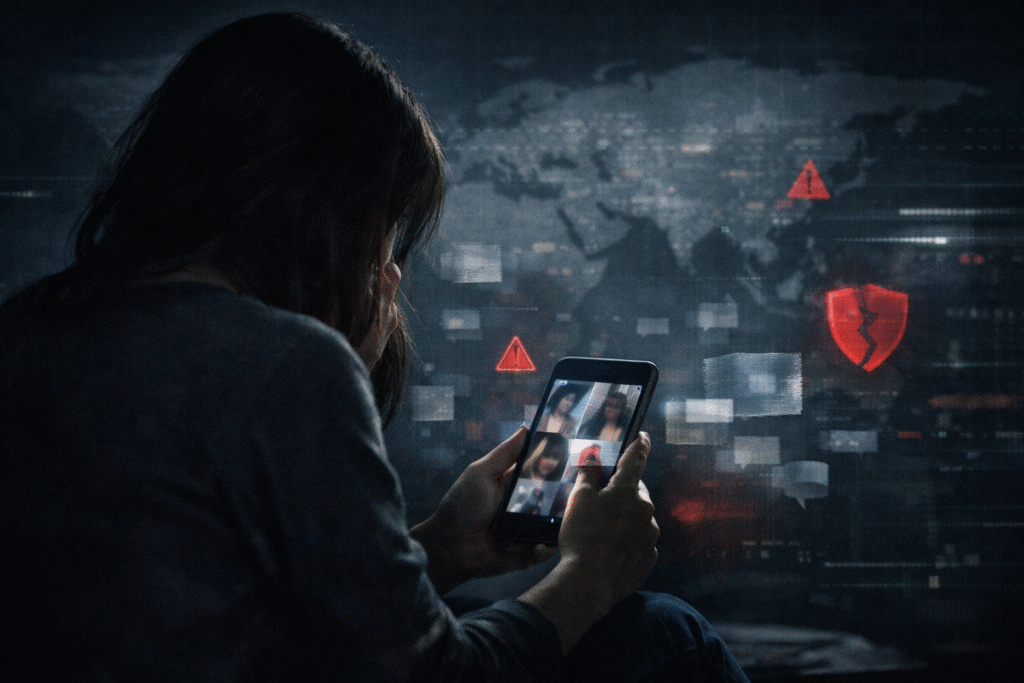

The first message arrives late at night. A friend writes, asking if she is “okay.” Then another. By morning, a blurred screenshot circulates in a private group chat: a photograph taken from her public social media profile—smiling, fully clothed—has been transformed into a hyper-realistic nude. She did not consent. She did not pose. She did not even know such a transformation was possible.

Within hours, the image jumps platforms: from a closed messaging group to a forum, then back into her mentions as anonymous users taunt her. Reporting links are buried; responses are automated. The damage—psychological, reputational, professional—has already begun.

This scene is no longer rare. It is emblematic of a fast-growing global phenomenon: the abuse of AI “undressing” or “nudification” tools, which use generative artificial intelligence to fabricate non-consensual sexual images from ordinary photographs, most often of women and girls.

What AI undressing tools are, and why experts call their misuse sexual violence

AI undressing or nudification tools take an existing image, often scraped from public timelines, and algorithmically generate a false nude body in place of the clothing. Unlike novelty filters, these tools are designed to produce plausibly real outputs, frequently indistinguishable at a glance from genuine intimate photographs.

Digital rights scholars and gender-violence researchers increasingly classify this practice as technology-facilitated sexual violence or image-based sexual abuse, not a prank. The reasons are clear:

- Absence of consent: the core harm mirrors that of non-consensual intimate image sharing.

- Sexualised coercion: images are used to humiliate, threaten, silence, or extort.

- Lasting impact: even when faked, the social consequences for victims are real and durable.

As one cyber-crime investigator put it in testimony to lawmakers, “The body in the image may be synthetic. The harm is not.”

Scope and scale: a fast-moving global problem

While precise global counts are difficult—many victims never report—credible research points to explosive growth:

- A 2023–2024 synthesis of NGO monitoring reports and academic studies found hundreds of thousands of non-consensual AI-generated sexual images, many produced with undressing tools, circulating across platforms, with year-on-year increases exceeding 300% in some datasets.

- Studies consistently show women and girls constitute over 90% of known victims, with disproportionate targeting of minors, journalists, activists, educators, and public figures.

- Regional patterns differ, but cases have been documented across North America, Europe, South Asia, East Asia, Latin America, and Africa, underscoring that this is not a problem confined to a single country.

Crucially, researchers warn that visibility lags reality. Encrypted messaging, invite-only groups, and anonymous forums hide the true scale from public view.

How the technology works—and why it spreads so easily

At a high level, the mechanics are simple enough to be dangerous. Modern image-to-image AI models learn statistical patterns of human bodies from vast datasets. When given a clothed photo, the system predicts what might exist beneath—producing a fabricated result that aligns with cultural expectations of nudity.

The spread is enabled by architecture, not just intent:

- Public photos supply abundant raw material.

- Encrypted messaging apps allow rapid redistribution beyond moderation systems.

- Anonymity and cross-border hosting make perpetrators hard to trace.

Detection and takedown remain technically and operationally difficult. As an AI safety researcher told a parliamentary committee, “Once an image is generated and re-encoded, it often evades hashing systems designed for known abusive content. Platforms are playing catch-up.”

The law: fragmented, outdated, and often silent

Governments have responded unevenly—if at all.

Where laws exist

A small but growing number of jurisdictions have enacted explicit bans on deepfake sexual imagery, treating it as a criminal offence regardless of whether the image depicts a real nude body.

Where old laws are stretched

Many countries rely on obscenity, defamation, or harassment statutes written long before generative AI. These often fail to address fabrication, consent, or platform liability, leaving prosecutors uncertain and victims unprotected.

Where there is nothing usable

In dozens of countries, particularly in parts of the Global South, no clear legal pathway exists. Victims are told the image is “fake,” therefore not illegal—an argument human rights advocates say fundamentally misunderstands the harm.

Common gaps include:

- No legal recognition of AI nudification as sexual abuse or gender-based violence.

- Weak or absent penalties for distributors and repeat offenders.

- Near-impossible cross-border enforcement when creators, platforms, and servers sit in different jurisdictions.

Platform responsibility: policies on paper, failures in practice

Major social media companies say they prohibit non-consensual sexual imagery, including AI-generated content. In practice, survivors and watchdogs describe a different reality.

Investigations by civil society groups have documented:

- Delayed responses to urgent takedown requests.

- Inconsistent moderation across languages and regions.

- Limited survivor support beyond automated acknowledgements.

Companies such as Meta publicly endorse safety measures like image hashing and reporting tools, while platforms widely used for private sharing, such as Telegram and Signal, face ongoing scrutiny over how abuse proliferates in closed spaces. Short-form video platforms, including TikTok, have expanded their policies, but enforcement remains uneven, according to regulators and NGOs.

As one European regulator noted in a briefing to Europol, “Voluntary measures without accountability will not keep pace with abuse that scales at machine speed.”

Human impact: when “fake” images cause real harm

Survivors describe profound consequences:

- Psychological distress: anxiety, depression, panic attacks, and social withdrawal.

- Reputational damage: rumours linger even after takedown, especially in tight-knit communities.

- Material loss: jobs lost, classes missed, political campaigns abandoned.

In conservative societies, stigma multiplies the harm. Victim-blaming—“Why was her photo online?”—silences reporting and pushes abuse further underground.

Gender, power, and why women are targeted

Experts emphasise that AI nudification abuse does not emerge in a vacuum. It amplifies existing inequalities.

- Women’s bodies have long been treated as sites of control and punishment.

- Public visibility—whether as a politician, journalist, or student leader—raises risk.

- The threat of sexualised humiliation becomes a tool to discipline women back into silence.

Human rights advocates argue this makes AI nudification a digital extension of offline gender-based violence, demanding comparable urgency.

International responses: beginnings, not solutions

Global institutions are starting to respond. UN-affiliated experts have warned that deepfake sexual abuse threatens women’s rights to privacy, dignity, and participation in public life. While no binding global treaty exists, emerging guidance frames AI-enabled sexual abuse as a human rights issue rather than a niche tech problem.

Some best practices are visible:

- Targeted national legislation criminalising non-consensual AI sexual imagery.

- Successful prosecutions signalling deterrence.

- Faster notice-and-takedown regimes paired with survivor assistance.

But these remain exceptions.

What experts say must change—now

Across regions, a consensus is forming around several concrete steps:

- Explicit criminalisation of non-consensual AI sexual imagery, regardless of “realism.”

- Fast-track takedown and evidence preservation obligations for platforms.

- Safety-by-design requirements for AI developers, including watermarking and abuse detection.

- Funded survivor support: legal aid, counselling, and digital hygiene assistance.

- Public education to counter stigma and victim-blaming.

Legal scholars stress that protecting victims does not require sacrificing free expression. The line, they argue, is consent: expression ends where sexualised coercion begins.

What is at stake

If governments and platforms continue to lag, experts warn of a chilling effect on women’s participation in digital and civic life. Public timelines will become hunting grounds. Silence will look safer than visibility.

A rights-respecting global response—rooted in consent, accountability, and survivor support—could still turn the tide. But time matters. The technology is evolving daily. The harm is compounding hourly.

The question is no longer whether AI undressing tools are being abused. It is whether the world is willing to act on that fact.
